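Project background

- A while ago I taught myself from the course "深度学习框架TensorFlow学习与应用" (learning and applying the TensorFlow deep learning framework) and implemented most of its code, including Google's deep learning model Inception v3. Inception is a deep learning model from Google built on the open-source TensorFlow project. It can recognize more than a thousand kinds of objects, has been optimized, and is well suited to transfer learning.

- I had just finished setting up an Anaconda + Tensorflow_gpu environment on the GPU my company recently bought.

- The final project for my university "Image Processing" course is pedestrian detection on a bus: count passenger flow in real time and draw a box around each person, as accurately as possible.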

Downloading Inception v3

The Inception v3 model is the one given in the project background. Download it and save it to disk; my path is

    E:\Python\测试下载\tensorflow\inception_model

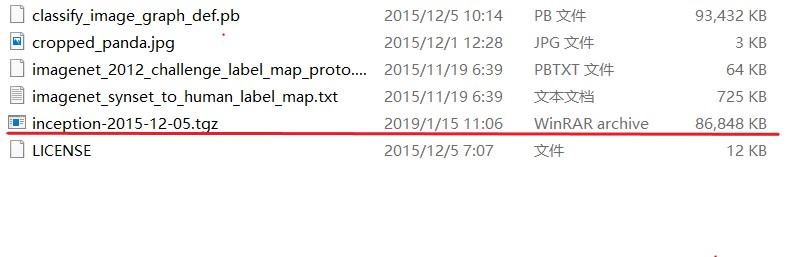

Save it wherever you like, because the path is set later when the model is called. The folder contains:

The transfer learning run mainly uses this tgz archive.

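Using the VGG dataset

Go to the VGG dataset and download images for a few categories. I downloaded flower, airplane, guitars, animal and motorbikes, keeping roughly 500 images per category for training. The images look like this:

Now we have five category folders, each holding about 500 images.

Next comes training. Following the video from the project background, write a batch file retrain.bat with the following contents:

    python E:/Python/测试下载/tensorflow/retrain.py ^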

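Line 1 gives the location of the Python file that is called to retrain the model. The file is too long to paste here; its address is here.

Line 2 gives the bottleneck output location for Inception v3. Create a bottleneck folder in the batch file's directory to hold the data produced during training.

Line 3 gives the number of training steps. Choose whatever you like; one or two hundred is fine, since a GPU handles that without strain. When I ran this on the CPU earlier it was painfully slow, the system crashed after it finished, and the model's test results were wrong. Oddly, a day later, without my having changed anything, the predictions came out right. Pure black magic.

Line 4 gives the location of the Inception v3 download so the script can load it.

Lines 5 and 6 save the results into the batch file's directory as two files, a .pb and a .txt, which hold the model and the labels respectively.

Line 7 points to the downloaded VGG images, split into five classes in five folders. The folder names must all be lowercase and the images must all be in .bmp format, otherwise training fails. The five folders sit under data/train/. Both data and train are folders we create ourselves, and data lives in the batch file's directory.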
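Since a wrong folder name or image format makes training fail, it can help to check the layout before launching the batch file. The following is a minimal sketch, not part of retrain.py: the function name `check_image_dir` and its `ext` parameter are made up for illustration, and it only checks the two rules described above (lowercase folder names, a single image extension).

```python
import os


def check_image_dir(image_dir, ext=".bmp"):
    """Return a list of human-readable problems found under image_dir.

    Hypothetical helper: it checks only that class folder names are
    lowercase and that every file in them ends with `ext`.
    """
    problems = []
    for name in sorted(os.listdir(image_dir)):
        class_dir = os.path.join(image_dir, name)
        if not os.path.isdir(class_dir):
            continue  # ignore stray files next to the class folders
        if name != name.lower():
            problems.append("folder name is not lowercase: " + name)
        for fname in sorted(os.listdir(class_dir)):
            if not fname.lower().endswith(ext):
                problems.append(
                    "unexpected file type: " + os.path.join(name, fname)
                )
    return problems
```

Calling `check_image_dir("data/train")` from the batch file's directory should return an empty list when the layout matches the description above.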

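Now run it: double-click retrain.bat, wait a few minutes, and the result is, boom...

Testing the trained model

No screenshot needed; you will know when training has succeeded. Pick a few images from the five categories that were not used for training and test on them. Here is the code:

    import tensorflow as tf

Remember to change the paths of the model .pb, the labels .txt, and the test image to your local locations. Then comes the moment of truth: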

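Cropping training samples from the video to be analyzed

Straight to the code:

    import os

Cropping images purely by hand would take forever. With the script, cutting out 500 positive samples (crops containing a target person) took a little under two hours, because the code was not polished at first...

Negative samples go much faster when there are few people in the frame: a bit over five hundred crops in ten-odd minutes.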

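Training the classifier

Positive samples only

At first I made no negative samples. I simply created a new class, mypassengers, in the folder holding the previous five classes, put my positive samples in it, and clicked the batch file again to retrain. The test afterward naturally looked great: the sample images were clearly separated from other images such as guitars, with prediction confidence above 0.95 on the samples. You might think the next step is to feed sliding-window crops straight into the network, but when I also fed in parts of the video that contain no people, this is what happened:

Er... I forgot to take a screenshot. In short, if the parts of the video without people are not included in training, the model treats them as targets too and makes no distinction. Training never told the model that it needs to distinguish people on the bus from other objects such as windows and seats, so negative samples have to be made and trained together with the rest.

Adding one more class for negative samples

Boom~ the results are very good. I will hold off on screenshots for now and update later, including using a sliding window to run recognition over a whole image.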

………………………..

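OK, it is the afternoon of May 13, 2019, and I am back. As I said before, I cropped positive and negative samples together for training, then used a sliding window to copy out a large number of test crops to feed into the network.

Detecting passenger flow in video frames with a sliding window

Sliding window

Neither the principle nor the implementation of a sliding window is hard. The key step is non-maximum suppression. Here is the code I found online and used directly; helper.py is called by the main program later.

    # -*- coding: utf-8 -*-

Then the sliding window, slideMask.py:

    # -*- coding: utf-8 -*-

The source code was found online and then modified by hand.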
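For readers who only want the idea, the core of a sliding window can be sketched in a few lines. This is a generic illustration, not the contents of slideMask.py: the function name `sliding_windows` and all of its parameters are assumptions chosen for the example, and it works on plain image dimensions rather than on a loaded image.

```python
def sliding_windows(img_w, img_h, win_w, win_h, step):
    """Yield the (x, y) top-left corner of every window that fits.

    Hypothetical helper: windows are win_w x win_h pixels and move
    `step` pixels at a time, left to right and then top to bottom.
    """
    for y in range(0, img_h - win_h + 1, step):
        for x in range(0, img_w - win_w + 1, step):
            yield x, y


# Example: a 100x60 frame scanned with a 50x50 window and a 25 px step.
corners = list(sliding_windows(100, 60, 50, 50, 25))
```

Each corner would then be used to crop the frame, for example `frame[y:y + win_h, x:x + win_w]` on a NumPy image array, and each crop is classified separately. A smaller step gives more crops and more overlapping detections, which is why the suppression step matters.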

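Non-maximum suppression and passenger detection

Without non-maximum suppression, the detection results look messy. The suppression function is below. boxs is a list of window boxes; each box is itself a list holding the window's top-left x and y and the window's score (prediction confidence).

    def py_nms(boxs, thresh=0.9, mode="Union"):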
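To make the idea concrete, here is a minimal greedy suppression sketch for boxes in the `[x, y, score]` form described above. It is not the `py_nms` function itself: the name `nms_fixed_size` is made up, it assumes every window has the same width and height (passed in as `win_w` and `win_h`), and it supports only intersection-over-union as the overlap measure.

```python
def nms_fixed_size(boxes, win_w, win_h, thresh):
    """Greedy non-maximum suppression over [x, y, score] boxes.

    Assumes all boxes share one window size. A box is dropped when its
    intersection-over-union with a higher-scoring kept box exceeds thresh.
    """
    area = float(win_w * win_h)
    # Visit boxes from highest to lowest score.
    remaining = sorted(boxes, key=lambda b: b[2], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        survivors = []
        for box in remaining:
            # Overlap of two equal-sized, axis-aligned windows.
            overlap_w = max(0, win_w - abs(box[0] - best[0]))
            overlap_h = max(0, win_h - abs(box[1] - best[1]))
            inter = overlap_w * overlap_h
            iou = inter / (2 * area - inter)
            if iou <= thresh:
                survivors.append(box)
        remaining = survivors
    return kept


# Two heavily overlapping windows and one far away.
demo = nms_fixed_size([[0, 0, 0.9], [5, 0, 0.8], [100, 100, 0.7]], 50, 50, 0.5)
```

In the demo, the window at (5, 0) overlaps the higher-scoring window at (0, 0) with an IoU of about 0.82, so it is suppressed, while the distant window survives.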

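At this point I made some changes to the test code posted earlier under "Testing the trained model" so that it reads images directly. The full test code is:

    # coding: utf-8

The detection images look like this:

Of course, like every paper author, I only posted the images where the effect is clear. Without non-maximum suppression, the result looks like this:

Many detections are not good. In the image below, the detection boxes are still somewhat off, and the man with the buzz cut was not detected at all, while a hand got boxed instead. That is because no short-haired samples were included in training...

Most importantly, Inception V3 is a large network and was not designed for detecting people, so it is clearly not real-time for passenger-flow detection; it can only handle a few hundred images or so. If too many frames are loaded into the session, it fails with:

    raise ValueError("GraphDef cannot be larger than 2GB.")
    ValueError: GraphDef cannot be larger than 2GB.

The session cannot load too many images, so the images have to be read into the session a portion at a time rather than feeding the whole video in directly. This does eventually produce results that can be assembled into an output video, but it is far too slow.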
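Reading images a portion at a time amounts to splitting the frame list into fixed-size batches. The sketch below shows only that splitting step; the name `batches` and the batch size are assumptions, and the per-batch inference call is left out because it depends on the test script above.

```python
def batches(items, batch_size):
    """Yield consecutive slices of items, each at most batch_size long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Hypothetical frame names; in practice these would be paths to saved frames.
frame_names = ["frame_%03d.bmp" % i for i in range(7)]
chunks = list(batches(frame_names, 3))
```

Each chunk would be processed and its results written out before the next chunk is loaded, so that only a limited number of frames is held at any one time.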

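Conclusion

It can detect passengers, but the results are mediocre and it is nowhere near real-time, so I am switching to YOLO. Anyone interested can follow along there.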